When the Face Swap app made its debut on Snapchat in 2016, there were, predictably, a lot of funny memes and photos doing the rounds. While it wasn’t a novel feature back then, it was something that people would indulge in out of boredom. Or just for the laughs. Something that comedian Tanmay Bhat got into trouble for, but that’s a different story.

It was all fun and games, even when Nicolas Cage literally became the face of all face-swap jokes. But soon enough, the trend entered dark territory. Advanced machine learning technology is now being used to create fake pornography with images of celebrities. The pictures and videos end up looking so real that they are good enough to fool anybody who isn’t paying close attention to details.

Uploaded without consent, the final images are uncanny. The Guardian reports that there’s a community on Reddit that has spent months creating and sharing these images, which were initially made by a user going by the name “deepfakes”.

Some of the celebrities whose faces were swapped into porn were Gal Gadot, Daisy Ridley, Taylor Swift, Scarlett Johansson, and Maisie Williams.

Sample this:

Credits: Motherboard.Vice.com

That’s not Gal Gadot, but an image of her face pasted onto a porn actress. The user “deepfakes” relies on open-source machine learning tools like TensorFlow, which Google makes freely available.

Techniques such as the Face2Face algorithm, which can swap a recorded video with real-time face tracking, have resulted in this new type of fake porn: fabricated videos of people having sex and saying things they never did.

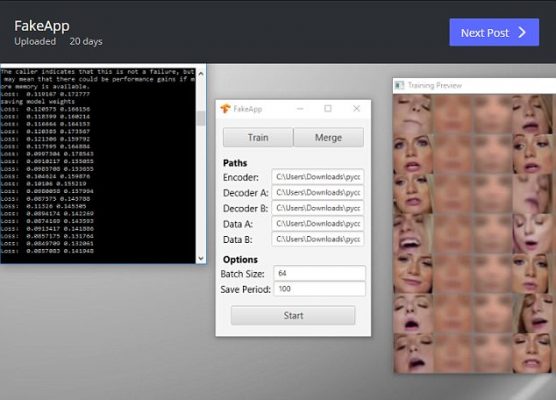

Speaking to Motherboard, “deepfakes” says that this whole celebrity swapping thing is not that difficult to do, suggesting that it is definitely going to be misused more often than not. The software the user has made available to the public, FakeApp, is based on multiple open-source libraries, like Keras with a TensorFlow backend. To compile the celebrities’ faces, “deepfakes” said he used Google image search, stock photos, and YouTube videos. Deep learning consists of networks of interconnected nodes that autonomously run computations on input data.
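To give a rough idea of what “networks of interconnected nodes” means, here is a minimal sketch in plain Python. It is only an illustration and not the code behind FakeApp or anything “deepfakes” used: the function names (`dense`, `forward`) and the hand-picked weights in `LAYERS` are hypothetical, and real systems learn their weights from data using libraries such as Keras rather than having them typed in.

```python
import math


def dense(inputs, weights, biases):
    """One layer of nodes: each node takes a weighted sum of every input,
    adds its bias, and squashes the result with a sigmoid."""
    outputs = []
    for node_weights, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(node_weights, inputs)) + bias
        outputs.append(1.0 / (1.0 + math.exp(-total)))
    return outputs


def forward(inputs, layers):
    """Pass the input through each layer in turn; the output of one layer
    becomes the input of the next."""
    activations = inputs
    for weights, biases in layers:
        activations = dense(activations, weights, biases)
    return activations


# Hypothetical, hand-picked weights (a trained network would learn these).
LAYERS = [
    ([[0.5, -0.5, 0.0], [0.0, 0.5, -0.5]], [0.0, 0.0]),  # 3 inputs -> 2 hidden nodes
    ([[1.0, -1.0]], [0.0]),                              # 2 hidden nodes -> 1 output
]

output = forward([1.0, 1.0, 1.0], LAYERS)
print(output)
```

Each node here is connected to every node in the layer before it, which is the “interconnected” part. The computation runs on whatever input it is handed, with no further human intervention once the weights are set.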

The law concerning these celebrity face-swaps gets a bit dodgy, with Wired doing a piece on the legal recourse available. Since it isn’t the celebrity’s body in the porn, it becomes difficult to pursue as a privacy violation case. Platforms could ban the images or communities for violating their terms of service, as Discord did. But Section 230 of the Communications Decency Act says that websites aren’t liable for third-party content.

However, private citizens are likely to have more of a legal advantage in these situations than celebrities because they aren’t considered public figures. But again, although it has started a dialogue on privacy, the misuse of this technology outweighs the positives behind it.

Feature Image: Reddit